- The do/while loop is a variant of the while loop. It executes the code block once before checking the condition, then repeats the loop as long as the condition is true.

- 'Music and the Brain' explores how music impacts brain function and human behavior, including by reducing stress, pain and symptoms of depression as well as improving cognitive and motor skills, spatial-temporal learning and neurogenesis, which is the brain's ability to produce neurons.
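As a brief illustration of the do/while loop described in the first item above: Python, the language JythonMusic is based on, has no built-in do/while statement, so the behavior is usually emulated with `while True` and a `break`. The following is a minimal sketch under that assumption; the helper name `do_while` and the `counter` example are hypothetical and not taken from any library.

```python
def do_while(body, condition):
    """Run body() once, then keep running it while condition() is true.

    Returns the number of times body() was executed.
    """
    runs = 0
    while True:
        body()               # the body always executes at least once
        runs += 1
        if not condition():  # the condition is checked only after the body
            break
    return runs


# Hypothetical example: the condition is already false before the loop starts.
counter = {"value": 10}


def increment():
    counter["value"] += 1


runs = do_while(increment, lambda: counter["value"] < 5)
print(runs, counter["value"])
```

A plain `while counter["value"] < 5:` loop would skip the body entirely here, which is the practical difference between the two forms.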

STANFORD, Calif. - Using brain images of people listening to short symphonies by an obscure 18th-century composer, a research team from the Stanford University School of Medicine has gained valuable insight into how the brain sorts out the chaotic world around it.

The research team showed that music engages the areas of the brain involved with paying attention, making predictions and updating the event in memory. Peak brain activity occurred during a short period of silence between musical movements - when seemingly nothing was happening.

Beyond understanding the process of listening to music, their work has far-reaching implications for how human brains sort out events in general. Their findings are published in the Aug. 2 issue of Neuron.

This 20-second clip of a subject's fMRI illustrates how cognitive activity increases in anticipation of the transition points between movements.

The researchers attempted to mimic the everyday activity of listening to music, while their subjects were lying prone inside the large, noisy chamber of an MRI machine. Ten men and eight women entered the MRI scanner with noise-reducing headphones, with instructions to simply listen passively to the music.

The researchers caught glimpses of the brain in action using functional magnetic resonance imaging, or fMRI, which gives a dynamic image showing which parts of the brain are working during a given activity. The goal of the study was to look at how the brain sorts out events, but the research also revealed that musical techniques used by composers 200 years ago help the brain organize incoming information.

'In a concert setting, for example, different individuals listen to a piece of music with wandering attention, but at the transition point between movements, their attention is arrested,' said the paper's senior author Vinod Menon, PhD, associate professor of psychiatry and behavioral sciences and of neurosciences.

'I'm not sure if the baroque composers would have thought of it in this way, but certainly from a modern neuroscience perspective, our study shows that this is a moment when individual brains respond in a tightly synchronized manner,' Menon said.

The team used music to help study the brain's attempt to make sense of the continual flow of information the real world generates, a process called event segmentation. The brain partitions information into meaningful chunks by extracting information about beginnings, endings and the boundaries between events.

'These transitions between musical movements offer an ideal setting to study the dynamically changing landscape of activity in the brain during this segmentation process,' said Devarajan Sridharan, a neurosciences graduate student trained in Indian percussion and first author of the article.

No previous study, to the researchers' knowledge, has directly addressed the question of event segmentation in the act of hearing and, specifically, in music. To explore this area, the team chose pieces of music that contained several movements, which are self-contained sections that break a single work into segments. They chose eight symphonies by the English late-baroque period composer William Boyce (1711-79), because his music has a familiar style but is not widely recognized, and it contains several well-defined transitions between relatively short movements.

The study focused on movement transitions - when the music slows down, is punctuated by a brief silence and begins the next movement. These transitions span a few seconds and are obvious to even a non-musician - an aspect critical to their study, which was limited to participants with no formal music training.

In the analysis of the participants' brain scans, the researchers focused on a 10-second window before and after the transition between movements. They identified two distinct neural networks involved in processing the movement transition, located in two separate areas of the brain. They found what they called a 'striking' difference between activity levels in the right and left sides of the brain during the entire transition, with the right side significantly more active.

In this foundational study, the researchers conclude that dynamic changes seen in the fMRI scans reflect the brain's evolving responses to different phases of a symphony. An event change - the movement transition signaled by the termination of one movement, a brief pause, followed by the initiation of a new movement - activates the first network, called the ventral fronto-temporal network. Then a second network, the dorsal fronto-parietal network, turns the spotlight of attention to the change and, upon the next event beginning, updates working memory.

'The study suggests one possible adaptive evolutionary purpose of music,' said Jonathan Berger, PhD, associate professor of music and a musician who is another co-author of the study. Music engages the brain over a period of time, he said, and the process of listening to music could be a way that the brain sharpens its ability to anticipate events and sustain attention.

According to the researchers, their findings expand on previous functional brain imaging studies of anticipation, which is at the heart of the musical experience. Even non-musicians are actively engaged, at least subconsciously, in tracking the ongoing development of a musical piece, and forming predictions about what will come next. Typically in music, when something will come next is known, because of the music's underlying pulse or rhythm, but what will occur next is less known, they said.

Having a mismatch between what listeners expect to hear vs. what they actually hear - for example, if an unrelated chord follows an ongoing harmony - triggers similar ventral regions of the brain. Once activated, that region partitions the deviant chord as a different segment with distinct boundaries.

The results of the study 'may put us closer to solving the cocktail party problem - how it is that we are able to follow one conversation in a crowded room of many conversations,' said one of the co-authors, Daniel Levitin, PhD, a music psychologist from McGill University who has written a popular book called This Is Your Brain on Music: The Science of a Human Obsession.

Chris Chafe, PhD, the Duca Family Professor of Music at Stanford, also contributed to this work. This research was supported by grants from the Natural Sciences and Engineering Research Council of Canada, the National Science Foundation, the Ben and A. Jess Shenson Fund, the National Institutes of Health and a Stanford graduate fellowship. The fMRI analysis was performed at the Stanford Cognitive and Systems Neuroscience Laboratory.

- By Mitzi Baker

Stanford Medicine integrates research, medical education and health care at its three institutions - Stanford University School of Medicine, Stanford Health Care (formerly Stanford Hospital & Clinics), and Lucile Packard Children's Hospital Stanford. For more information, please visit the Office of Communication & Public Affairs site at http://mednews.stanford.edu.

JythonMusic is an environment for music making and creative programming. It is meant for musicians and programmers alike, of all levels and backgrounds.

JythonMusic provides composers and software developers with libraries for music making, image manipulation, building graphical user interfaces, and connecting to external devices, such as digital pianos, smartphones, and tablets.

JythonMusic is based on Python programming. It is easy to learn for beginners, and powerful enough for experts.

Used in education

JythonMusic is used in computer programming classes combining music and art. Here is a first-year university class performing Terry Riley's 'In C'.

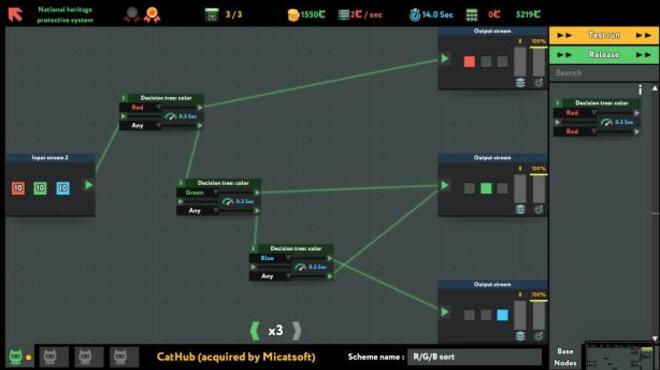

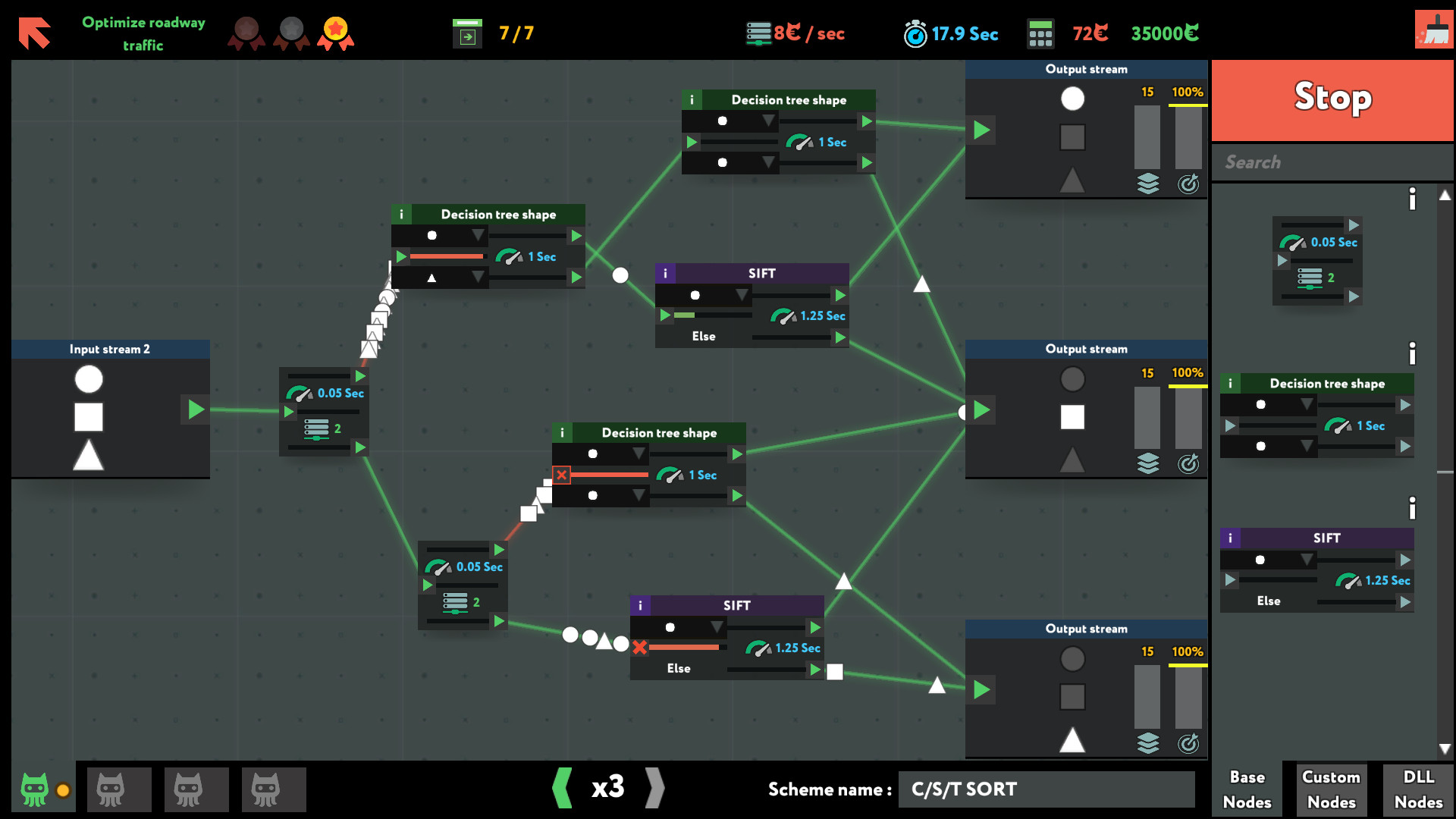

Used in music

JythonMusic supports musicians with its familiar music data structure based upon note/sound events, and provides methods for organizing, manipulating and analyzing such musical data. JythonMusic scores can be played back in real-time, rendered as MIDI or XML, and drive external synthesizers and DAWs (e.g., Ableton, LogicPro, and PureData).
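To make the idea of a note-event data structure concrete, here is a small sketch in plain Python. It is not the JythonMusic API: the `NoteEvent` class and the `transpose` and `total_duration` helpers are hypothetical names introduced only for illustration, and the sketch assumes pitches are stored as MIDI note numbers (60 is middle C) and durations as quarter-note beats.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class NoteEvent:
    """One note event (hypothetical class, not part of JythonMusic)."""
    pitch: int       # MIDI note number; 60 is middle C
    duration: float  # length in quarter-note beats


def transpose(phrase: List[NoteEvent], semitones: int) -> List[NoteEvent]:
    """Return a new phrase with every pitch shifted by the given interval."""
    return [NoteEvent(n.pitch + semitones, n.duration) for n in phrase]


def total_duration(phrase: List[NoteEvent]) -> float:
    """Sum the durations of all note events in the phrase."""
    return sum(n.duration for n in phrase)


# A C major arpeggio: C4, E4, G4.
phrase = [NoteEvent(60, 1.0), NoteEvent(64, 1.0), NoteEvent(67, 2.0)]
up_a_fifth = transpose(phrase, 7)

print([n.pitch for n in up_a_fifth])
print(total_duration(phrase))
```

Treating a phrase as an ordered list of events keeps manipulation (transposing) and analysis (measuring length) as simple functions over the same data.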

JythonMusic can connect to external MIDI controllers and OSC devices (e.g., smartphones and tablets) for musical or other purposes. Here is an interface utilizing Myo armbands and PureData to control a performance half-way around the world.

Used in art

JythonMusic is used in art projects. It works well with other tools, like MIT Processing and PureData. Here is an interactive multimedia art installation developed using JythonMusic.

Used in research

JythonMusic is designed to be extendible, encouraging you to build upon its functionality by programming in Jython to create your own musical compositions, tools, and instruments. Here is a hyperinstrument consisting of guitar and computer, for a research project in computer-aided music composition.

It is free

JythonMusic is free, in the spirit of other tools, like PureData and MIT Processing. It is an open source project.

JythonMusic is 100% Jython and works on Windows, Mac OS, Linux, or any other platform with Java support.

It comes with a textbook

JythonMusic comes with a textbook. The textbook is intended for

- students in computing in the arts, or music technology courses

- musicians, who are beginning programmers, to learn Python in a musical way

- programmers, who are beginning musicians, to learn essential music concepts in a programming way

- musician programmers (or programmer musicians), who seek inspiration, and a new, comprehensive way to interweave music composition, music performance, and computing.

For more information, see

- B. Manaris and A. Brown, Making Music with Computers: Creative Programming in Python, Chapman & Hall/CRC Textbooks in Computing, May 2014.

- B. Manaris, B. Stevens, and A.R. Brown, 'JythonMusic: An environment for teaching algorithmic music composition, dynamic coding and musical performativity', Journal of Music, Technology & Education, 9: 1, pp. 33–56, May 2016. (doi: 10.1386/jmte.9.1.33_1)

This material supports the AP Computer Science Principles Curriculum.

Credits

JythonMusic is developed by Bill Manaris, Kenneth Hanson, Dana Hughes, David Johnson, Seth Stoudenmier, Christopher Benson, Margaret Marshall, and William Blanchett.

JythonMusic is based on the jMusic computer-assisted composition framework, created by Andrew Brown and Andrew Sorensen.

The JEM editor is based on the TigerJython editor developed by Tobias Kohn.

JythonMusic also incorporates the jSyn synthesizer by Phil Burk, and other cross-platform programming tools.

Various components have been supported by the US National Science Foundation (DUE-1323605, DUE-1044861, IIS-0736480, IIS-0849499 and IIS-1049554).